Understanding Technical SEO

In my experience working with fashion e-commerce business owners, including designers and clothing brand managers, I've noticed a common theme: technical SEO often presents the most confusion and misunderstanding. My goal with this business is to take the mystery out of SEO, so that it’s more accessible to everyone.

As a fashion e-commerce business owner, you don't need to master every technical detail—there are skilled web developers and SEO experts for that. However, a basic understanding of important technical SEO aspects and terminology can significantly impact your business operations.

This foundational knowledge will help you feel more in control and ensure transparency when you hire professionals to enhance your site's SEO.

In this article, we'll examine the 11 essential elements of the technical SEO checklist, providing a clear, practical guide to each. The technical SEO checklist makes up one part of my comprehensive SEO Ranking Factors Checklist. Prepare for a technical SEO show-and-tell that will empower you to collaborate more effectively with your team and elevate your online presence.

You will learn:

- What is technical SEO?

- What is each item on the technical SEO checklist?

- Definitions and example images

- Common issues amongst e-commerce websites

- Best practices and how the common issues are typically resolved

What is Technical SEO?

Technical SEO concentrates on refining the backend structure of your website to ensure that search engines like Google and Bing can efficiently crawl and index your web pages. It also addresses critical issues such as fixing errors, broken URLs, and redirects, auditing load speed, checking for toxic backlinks, and ensuring your site is mobile-friendly.

Why is technical SEO important to your business?

As an SEO specialist, I often come across websites where pages are not being properly crawled or indexed—even when they appear to be working fine on the surface. If search engines cannot crawl your website, it won’t appear in their search engine results—which means web users won’t find your site pages.

The bottom line? Loss of opportunities to make online sales.

When I start working with a new client, I always conduct a complete SEO site audit—a website health check that flags many key issues affecting the website’s visibility, including technical SEO issues.

What is the difference between Technical and On-Page SEO?

I get this question a lot! While technical SEO deals with the backend structure, on-page SEO focuses on elements such as focus keywords, title tags, meta descriptions, headings, block text, blogs and internal links.

Technical SEO is one of the foundational aspects of SEO that needs to be addressed and improved before moving on to more advanced SEO strategies. To learn more about the three levels of a complete SEO strategy, refer to my Complete “How-To” Guide for E-Commerce SEO - From Beginner to Advanced.

What is on the Technical SEO Checklist?

To help ensure that your technical SEO is properly addressed and maintained, here is a handy checklist of 11 key areas to focus on. As you read this list, don’t be intimidated—I’ll explain each item in the next sections.

Technical SEO Checklist

- Crawling and Indexing

- Robots.txt

- XML sitemaps

- Schema markup (aka Structured data)

- HTTPS

- Page Speed

- Mobile Friendliness

- Site Structure

- URL Structure

- Canonical Tags

- Broken links (404 and 301 redirects)

These items are included in my complete SEO checklist, which is downloadable here: SEO Ranking Factors Checklist

Now that you know the definition of technical SEO and the eleven key items on the checklist, let’s sink our teeth into each item to learn how they impact your site’s visibility, the common issues seen on e-commerce websites, and how those issues are typically resolved.

Crawling and Indexing

Check that pages you want visible in search engines are being crawled and indexed.

Google uses automated software called search bots or spiders. These bots visit web pages in a process called “crawling the web”. Then they add the pages that they crawl to Google's index, which is like a huge catalog of hundreds of billions of web pages. Think of it as a library where all these web pages are catalogued and organized.

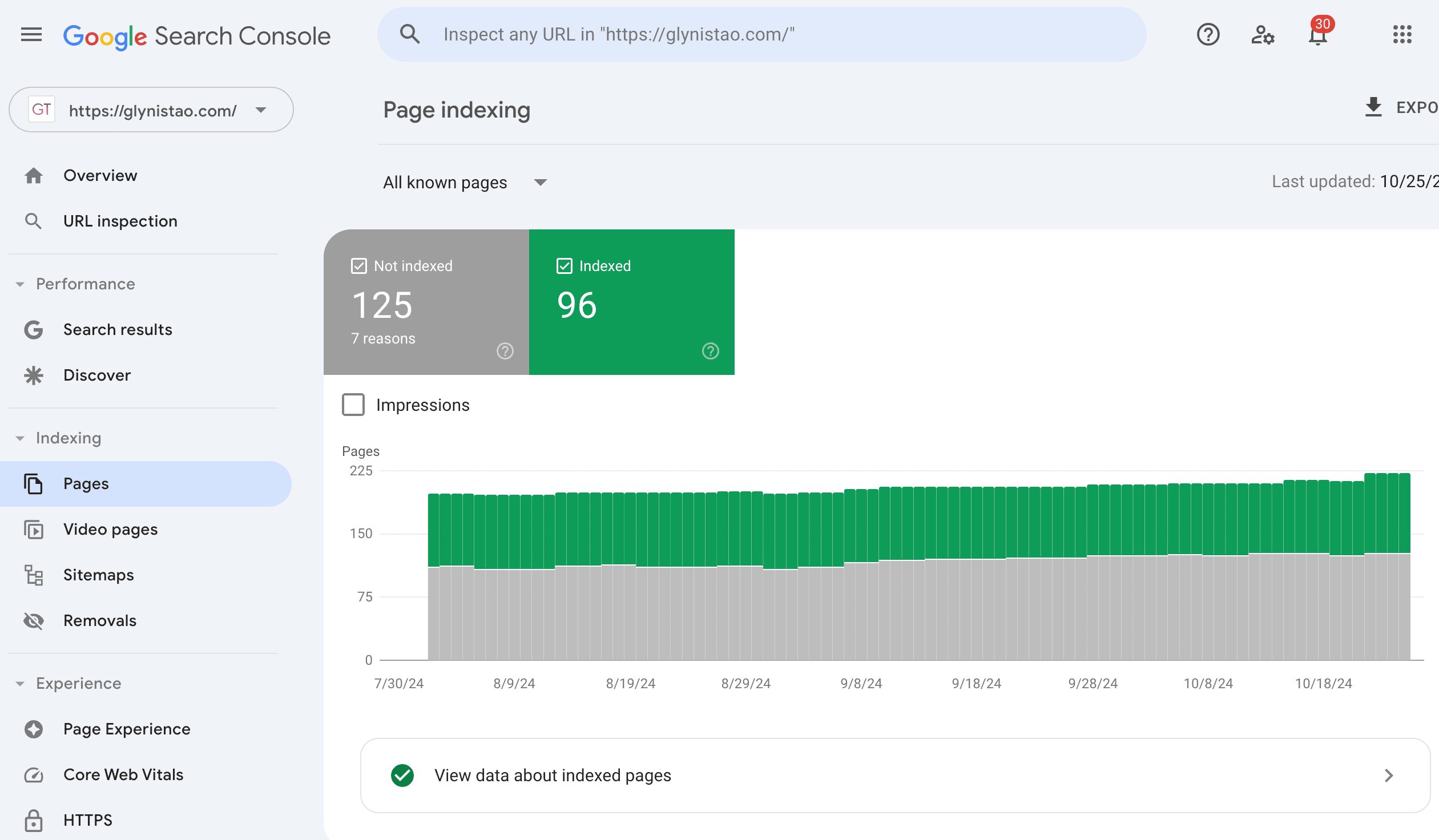

You can check if your website pages are being properly crawled and indexed by using the URL inspection tool in Google Search Console.

For the pages that you want the public to be able to search and find, you need to make sure that they are crawlable and indexable. Several things can prevent crawling and indexing, and they include the remaining items on the technical SEO checklist that you’ll learn about here.

Robots.txt

Make sure your robots.txt file is not blocking search engines from crawling pages you want shown in search results.

A website’s robots.txt file tells search engines which pages can or cannot be crawled on a site.

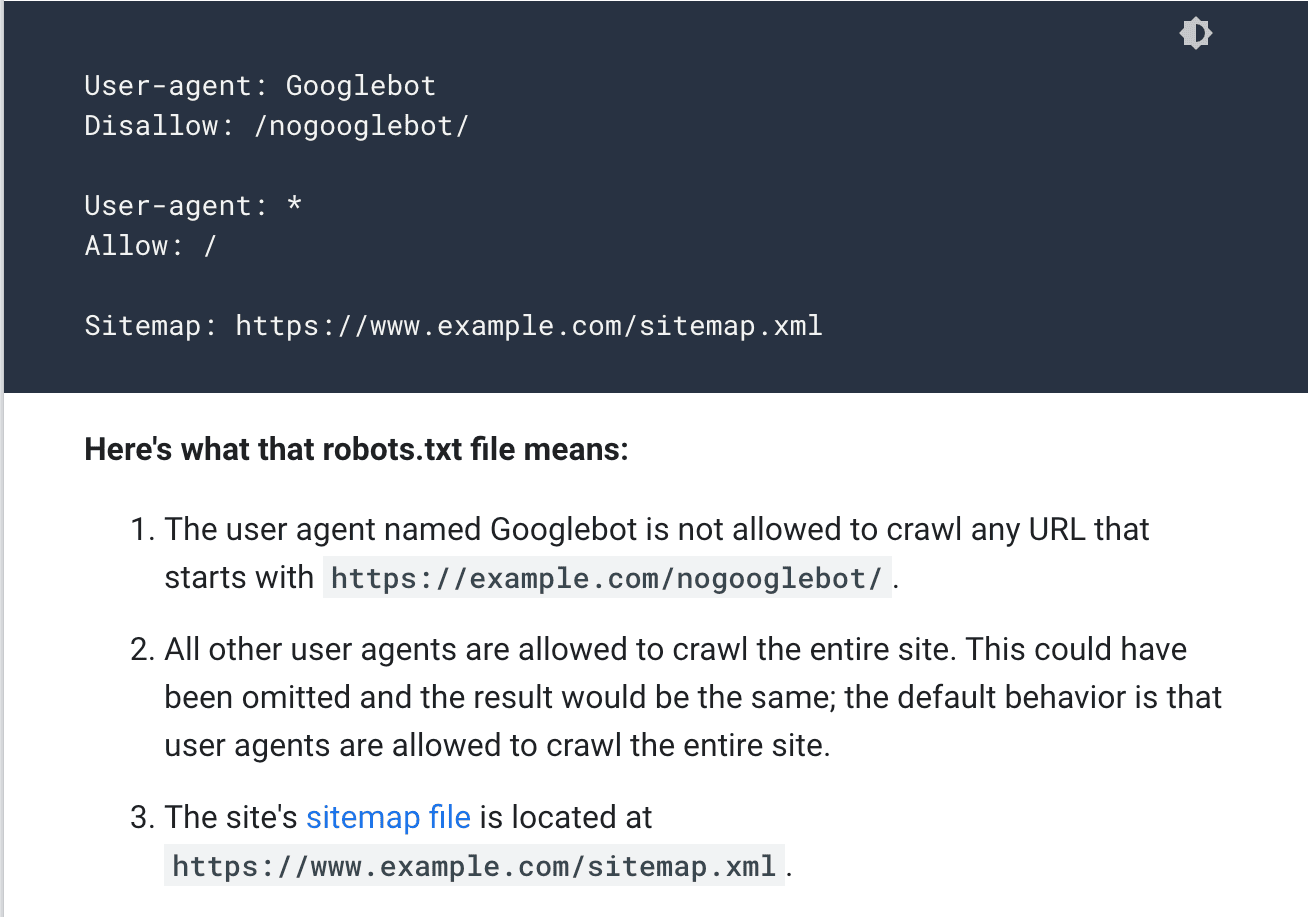

A robots.txt file is located at the root directory of your website. For instance, if your website is www.example.com, you would find the robots.txt file at www.example.com/robots.txt. This file, which is a plain text file adhering to the Robots Exclusion Standard, contains one or more rules. These rules either permit or restrict access to your website's content for various crawlers based on the specified file path within the domain or subdomain that hosts the robots.txt file. By default, if not explicitly mentioned in the robots.txt file, all content on the site is allowed to be crawled.

Below is an example of a simple robots.txt file containing two rules:

Example from Google Search Central
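If you're curious how a developer might test rules like these, here is a minimal sketch in Python using the standard library's robots.txt parser. The two-rule file is modeled on the example in Google's documentation. The function name is_crawl_allowed and the example.com URLs are my own illustrations, not part of any official tool.

```python
from urllib.robotparser import RobotFileParser

# A two-rule robots.txt: Googlebot is kept out of /nogooglebot/,
# and every other crawler may crawl the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /nogooglebot/

User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text lets `user_agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical pages on a fashion store, checked for two different crawlers.
for agent, url in [
    ("Googlebot", "https://www.example.com/nogooglebot/page.html"),
    ("Googlebot", "https://www.example.com/collections/dresses"),
    ("OtherBot", "https://www.example.com/nogooglebot/page.html"),
]:
    print(agent, url, is_crawl_allowed(ROBOTS_TXT, agent, url))
```

A check like this is a quick way to confirm that an important collection or product page is not accidentally caught by a Disallow rule.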

A good reason for blocking a page might be that it contains private information that you don’t want the public to see. But sometimes the instructions in your robots.txt file inadvertently block pages that you want to be crawled and discovered by the public.

In the beginning stages of working with my SEO clients, we often discover these issues and address them as soon as possible, bringing in a web developer when needed. A typical Content Management System (CMS) such as Shopify will create a default robots.txt file that works for most sites, but you can also customize your robots.txt file. A web developer can help to ensure you have the proper robots.txt file configuration for your site.

You can read more in-depth information about robots.txt files on Google Search Central.

Links for further information:

Google Search Central: How to write and submit a robots.txt file

SEMrush: What is a robots.txt file?

XML Sitemaps

Submit your XML sitemap to Google Search Console for crawling and indexing.

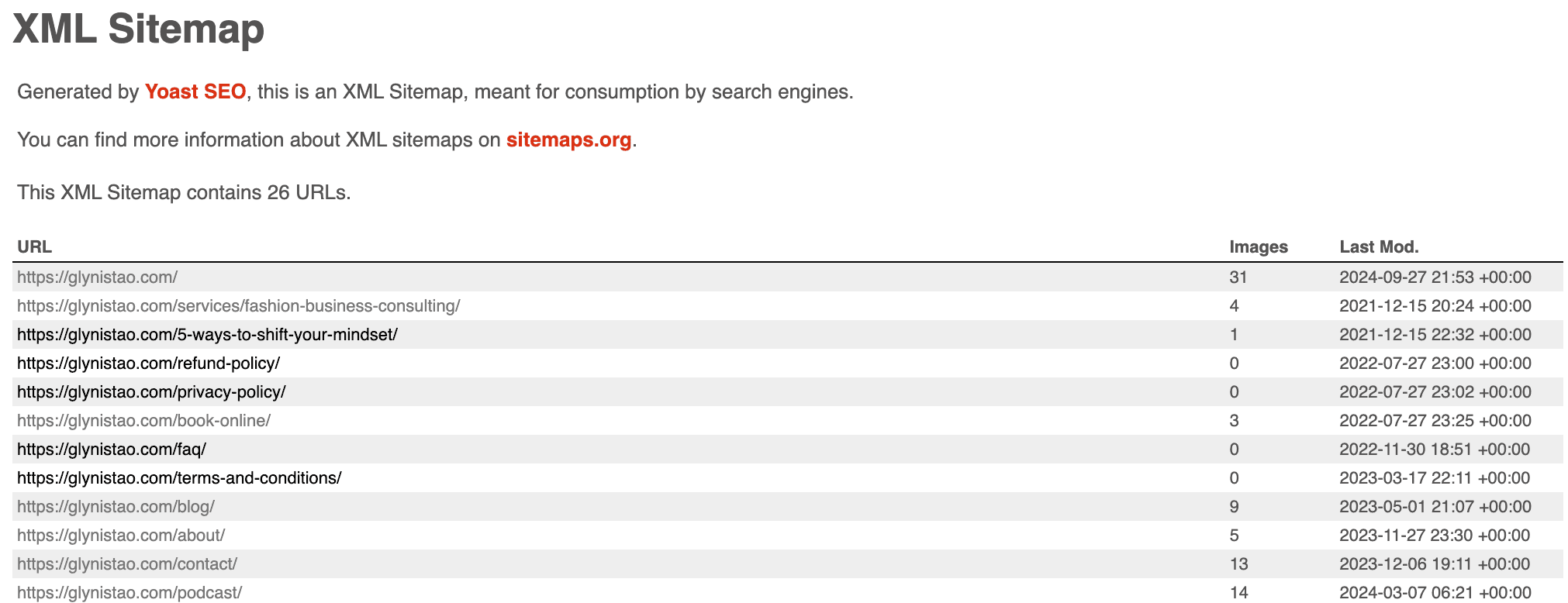

An XML sitemap is a file that helps Google discover your pages, triggering crawling and indexing. New sites might not be recognized by search engines if the sitemap is not properly submitted.

This is what a sitemap looks like:

This is an example of a sitemap that can be found at https://glynistao.com/sitemap.xml
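To show the structure in text form, here is a minimal sketch in Python that builds a small sitemap following the sitemaps.org format. The page URLs and dates are hypothetical, and build_sitemap is a name I made up for this illustration. Platforms like Shopify create this file for you, so this is only meant to show what is inside one.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from a list of (url, last_modified) tuples.

    `last_modified` is a date string in YYYY-MM-DD format.
    """
    ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for a fashion store.
print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/collections/dresses", "2024-01-10"),
]))
```

Each url entry lists one page address (loc) and, optionally, when it was last changed (lastmod), which is the information search engines read when they process your sitemap.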

Once the sitemap is submitted, Google’s crawlers can catalog your site content more efficiently. E-commerce platforms like Shopify generate a sitemap for you automatically, and WordPress sites also benefit from submitting an XML sitemap to ensure comprehensive indexing. It can take up to 48 hours for the submission to show data in Search Console, so be patient and check back periodically to see how Google has processed the sitemap.

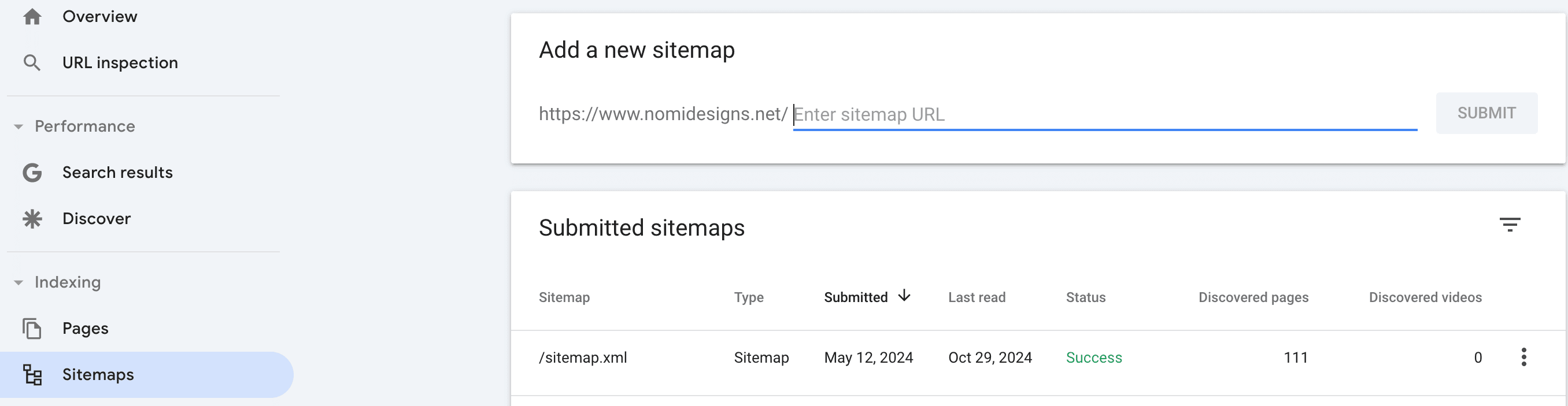

How to submit your sitemap

We will talk more about Google Search Console in a later blog post. For now, to submit your sitemap, open Sitemaps in Google Search Console, type in your site's URL followed by /sitemap.xml, and submit.

Links for further information:

Google Search Central: Learn about sitemaps

Schema Markup (Structured Data)

Implement structured data to enhance understanding by search engines.

Schema markup, or structured data, is a technical language that websites use to tell search engines what their web pages are about. According to Google Search Central, “Google uses structured data that it finds on the web to understand the content of the page, as well as to gather information about the web and the world in general, such as information about the people, books, or companies that are included in the markup.”

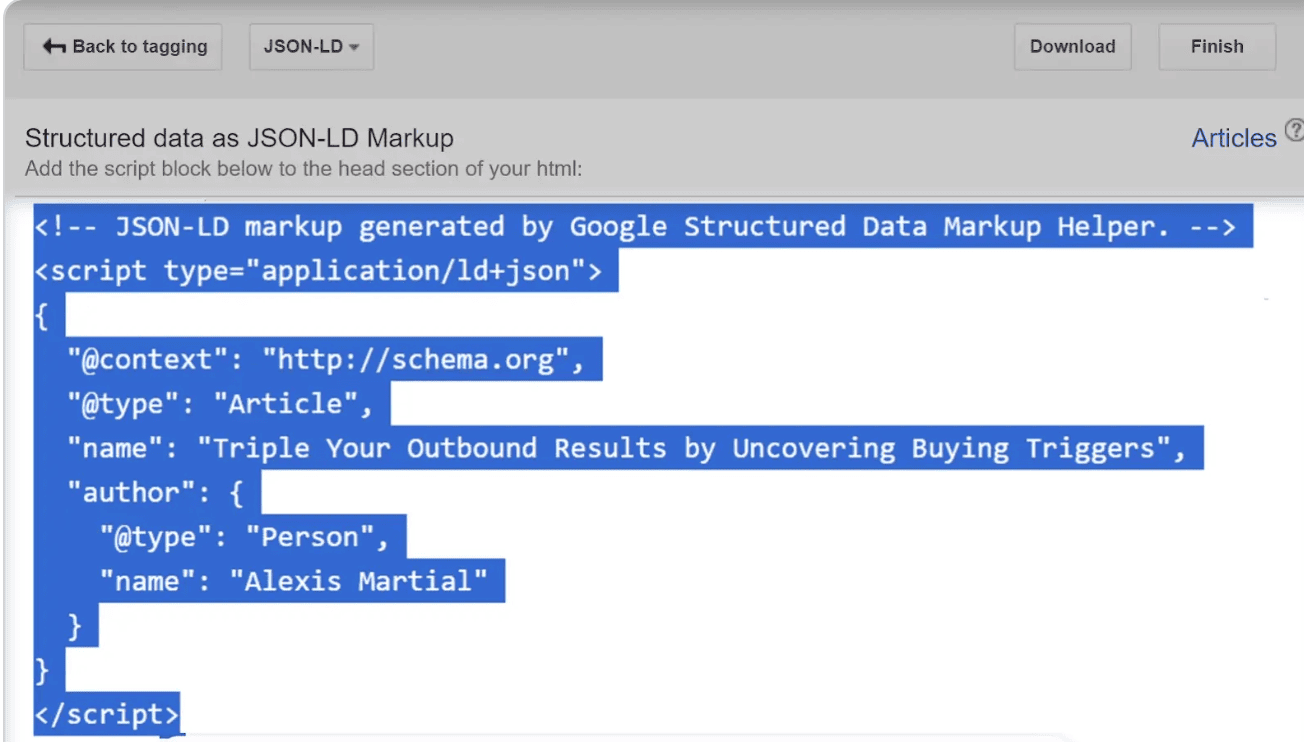

This is what schema markup looks like:
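As a text illustration, here is a minimal sketch in Python that produces product markup in the JSON-LD format using schema.org's Product and Offer types. JSON-LD is only one of the formats structured data can take. The product details are hypothetical, and product_jsonld is a name I chose for this example, so treat it as a rough picture rather than the exact markup your theme generates.

```python
import json


def product_jsonld(name, description, image_url, price, currency, in_stock=True):
    """Return a <script> tag containing schema.org Product markup as JSON-LD."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": image_url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Hypothetical product from a fashion store.
print(product_jsonld(
    name="Linen Wrap Dress",
    description="A lightweight linen wrap dress in sage green.",
    image_url="https://www.example.com/images/linen-wrap-dress.jpg",
    price=129,
    currency="USD",
))
```

The resulting script tag sits in the page's HTML, where search engines can read the product name, price and availability directly instead of inferring them from the visible text.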

Schema markup is usually added to the website code by a web developer to help search engines better understand the content. Without schema markup, search engines might not fully understand the context of your page content, which can impact your visibility. You can use structured data testing tools such as Schema Markup Validator to check if your schema markup is working properly.

Links for further information:

Introduction to structured data markup in Google Search

HTTPS

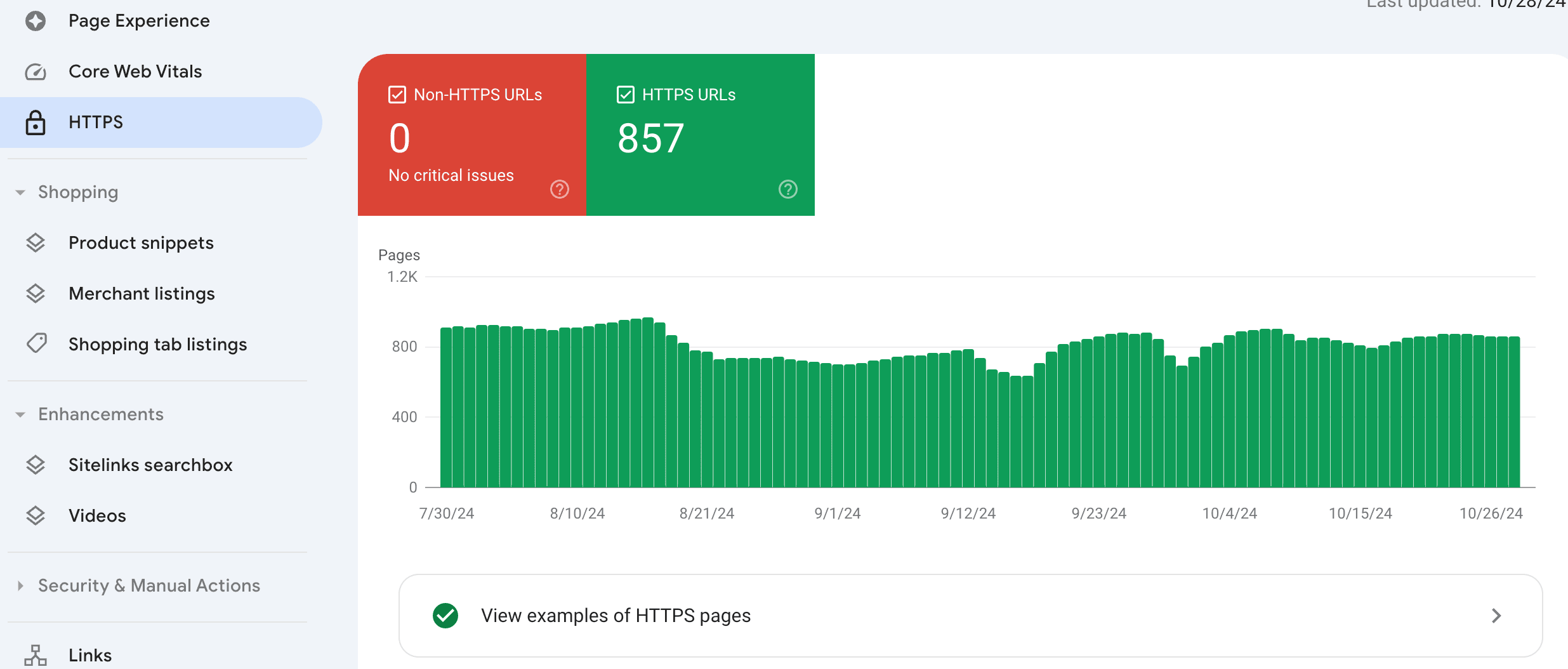

Ensure your website is secure with HTTPS.

HTTPS (HyperText Transfer Protocol Secure) is a secure version of HTTP that encrypts data between a browser and a website, protecting user privacy and security. It is especially important for sites handling sensitive information.

HTTPS is a ranking factor for search engines. Browsers may label non-HTTPS sites as "Not Secure," which can reduce visitor trust, and Google and other search engines favor secure sites. To secure your site, install an SSL certificate through your hosting provider. This enables HTTPS, protects your visitors' data, and supports your SEO performance.

Links for further information:

Cloudflare: Why is HTTP not secure?

Page Speed

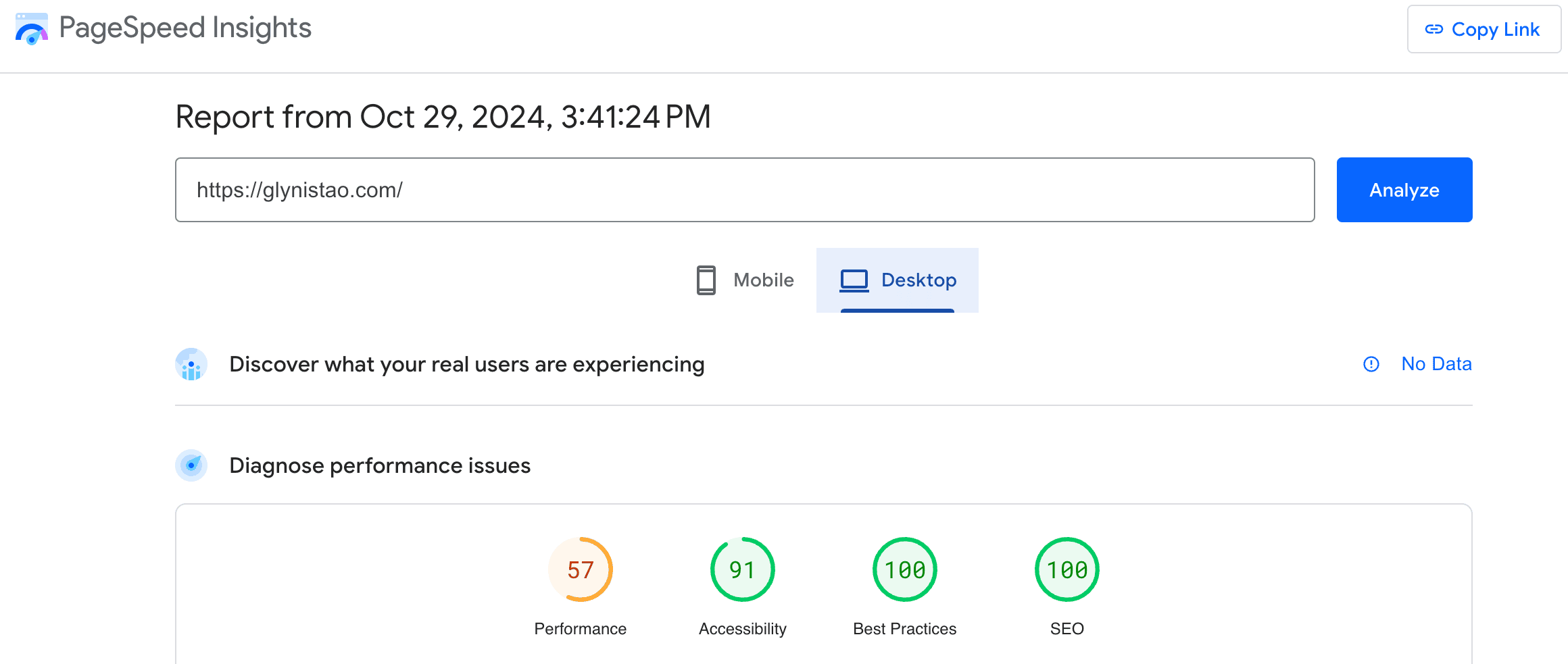

Check your site's load time and optimize images or reduce server response time to improve speed.

Page speed refers to how quickly the pages on your website load.

A slow site can lead to poor user experience, lower search engine rankings and increased bounce rates (percentage of visitors who land on a webpage and leave without taking any further action).

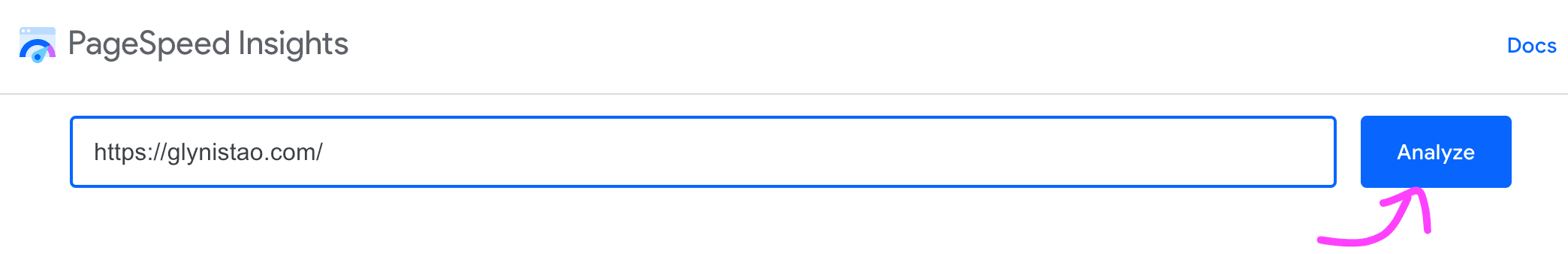

Use tools like Google PageSpeed Insights to identify and fix issues impacting load time.
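PageSpeed Insights also has a web API that a developer can use to check many pages at once. The sketch below, in Python, builds a request for the version 5 endpoint and reads the performance score from the response. The response field names reflect my understanding of that API, so confirm them against Google's documentation before relying on them. The function names are my own, and the sample response is made up for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build the API request URL; `strategy` is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(psi_response):
    """Convert the 0-1 Lighthouse performance score to a 0-100 score."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


def fetch_performance_score(page_url, strategy="mobile"):
    """Call the API over the network and return the 0-100 score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=60) as response:
        return performance_score(json.load(response))


# Made-up response fragment, used here so the example runs without a network call.
sample_response = {"lighthouseResult": {"categories": {"performance": {"score": 0.87}}}}
print(psi_request_url("https://www.example.com/"))
print(performance_score(sample_response))
```

Running a check like this on your homepage, a collection page and a product page gives a quick before-and-after comparison when you compress images or change themes.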

Mobile Friendliness

Ensure your site is responsive across devices.

Mobile friendliness means your website adjusts to various screen sizes and is easy to use on mobile devices. With more mobile users than desktop, a non-mobile-friendly site can negatively affect rankings and user experience, so you need to make sure that your website is responsive, adjusting seamlessly to both mobile and desktop screens.

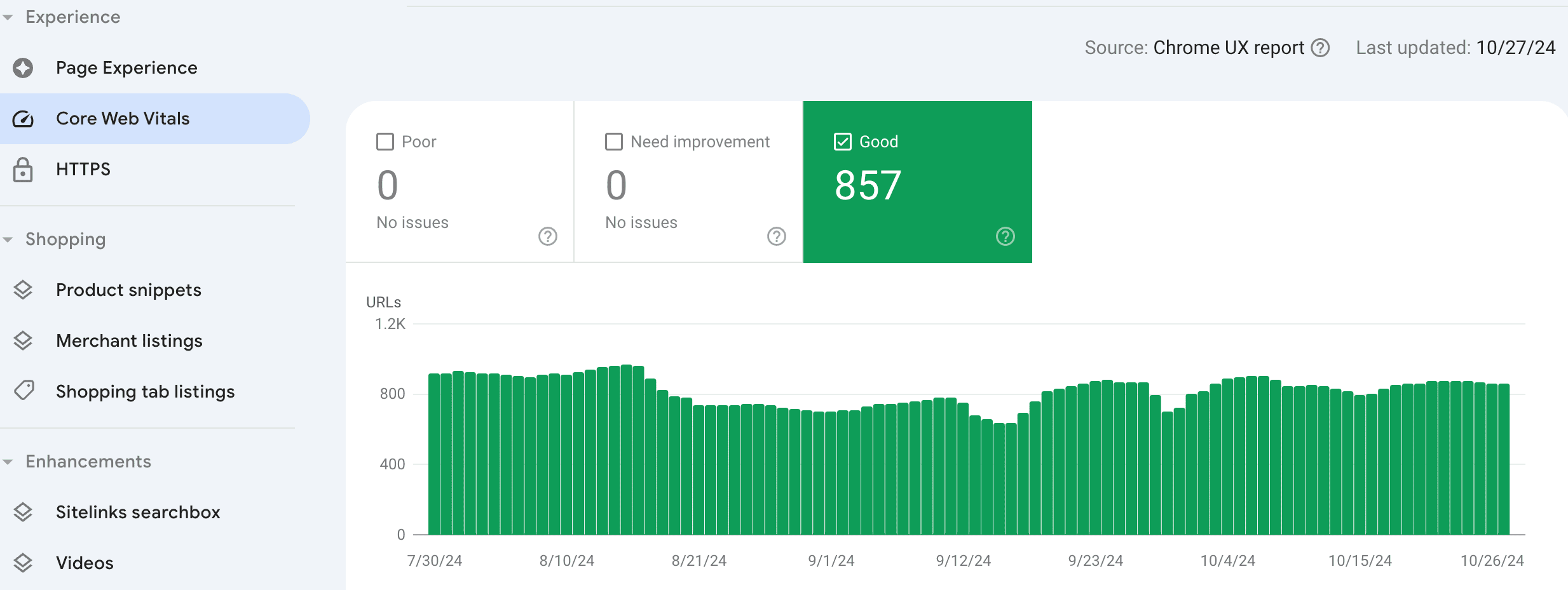

In Google Search Console, you can review how your pages perform on mobile devices in the mobile section of the Core Web Vitals report.

Site Structure

Create a clear, logical structure for your site.

Website structure refers to how your pages are organized and linked within your website. Complex or unclear site structures can confuse both users and search engines when navigating your site. Google might have difficulty indexing important pages and this can impact your rankings.

I often encounter e-commerce websites with overly complicated site structures. My best advice is to keep things simple and predictable for the site user.

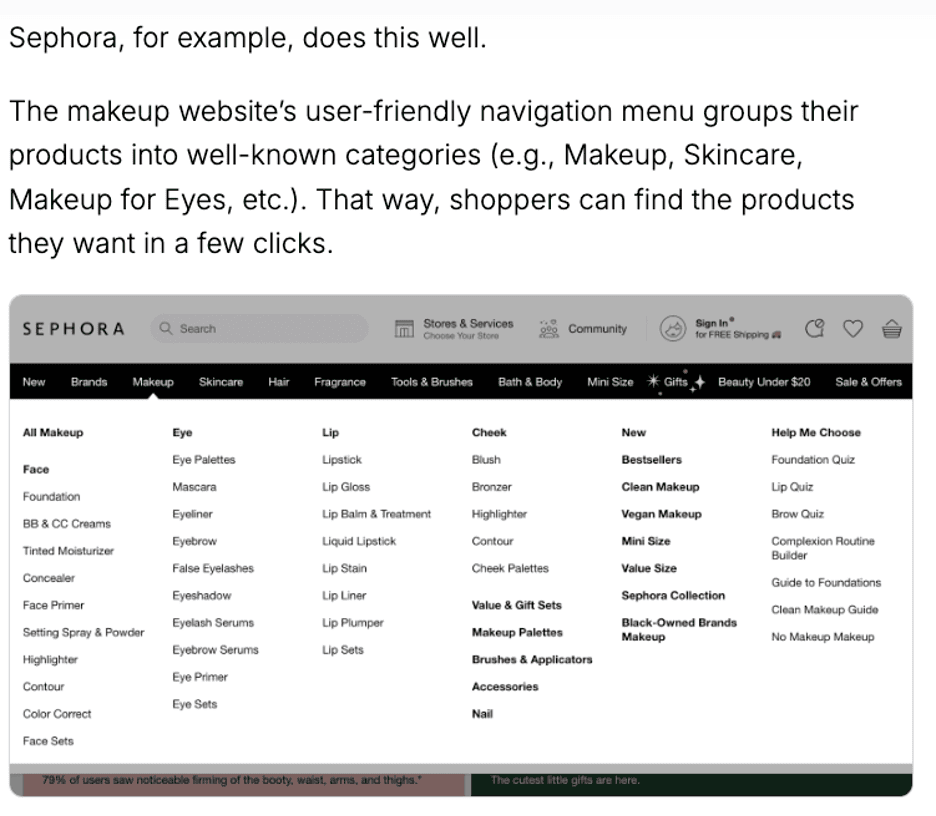

SEMrush advises that “you should plan your website’s structure so users can easily move down your marketing funnel by navigating to pages like products, services, and other important pages.”

Here is an example of Sephora’s structure provided by SEMrush:

You can use an audit tool like SEMrush to analyze your site structure and fix any issues.
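One simple way to put a number on "simple and predictable" is to count how many clicks it takes to reach each page from the homepage. The Python sketch below does this for a small, hypothetical link map. The click-depth measure, the page paths and the function name are my own illustration and are not how any particular audit tool works.

```python
from collections import deque


def click_depths(links, home="/"):
    """Return the fewest clicks needed to reach each page from `home`.

    `links` maps a page path to the list of pages it links to.
    Pages that cannot be reached from `home` are left out of the result.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:  # breadth-first search, so the first visit is the shortest path
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal links on a small fashion store.
SITE_LINKS = {
    "/": ["/collections/dresses", "/collections/tops", "/blog"],
    "/collections/dresses": ["/products/linen-wrap-dress"],
    "/collections/tops": [],
    "/blog": ["/blog/spring-lookbook"],
    "/blog/spring-lookbook": ["/products/silk-camisole"],
}

for page, depth in sorted(click_depths(SITE_LINKS).items(), key=lambda item: item[1]):
    print(depth, page)
```

In this made-up store, the silk camisole is only reachable through a blog post, three clicks from the homepage, which is a hint that it should also be linked from a collection page.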

Links for further information:

SEMrush: What is website architecture?

URL Structure

Simplify and optimize your URLs with relevant keywords.

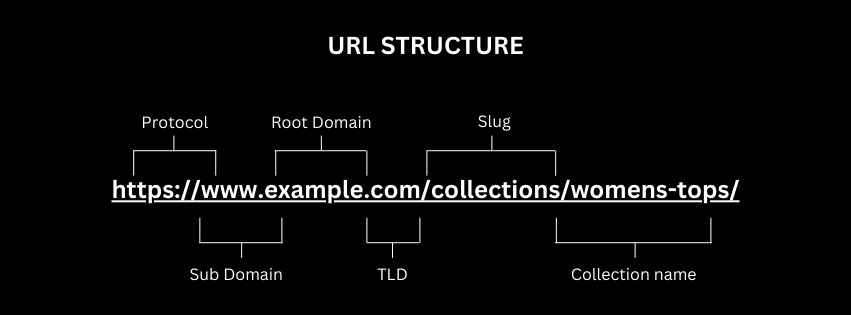

URL structure refers to how URLs are organized on your site, including domain and subfolders.

Your URLs should be simple, descriptive, and include relevant keywords so that search engines can easily understand what’s on those pages and index them accordingly. When I analyze which pages on a client's site rank well for their target keywords, the URLs that include the relevant keywords usually rank better.

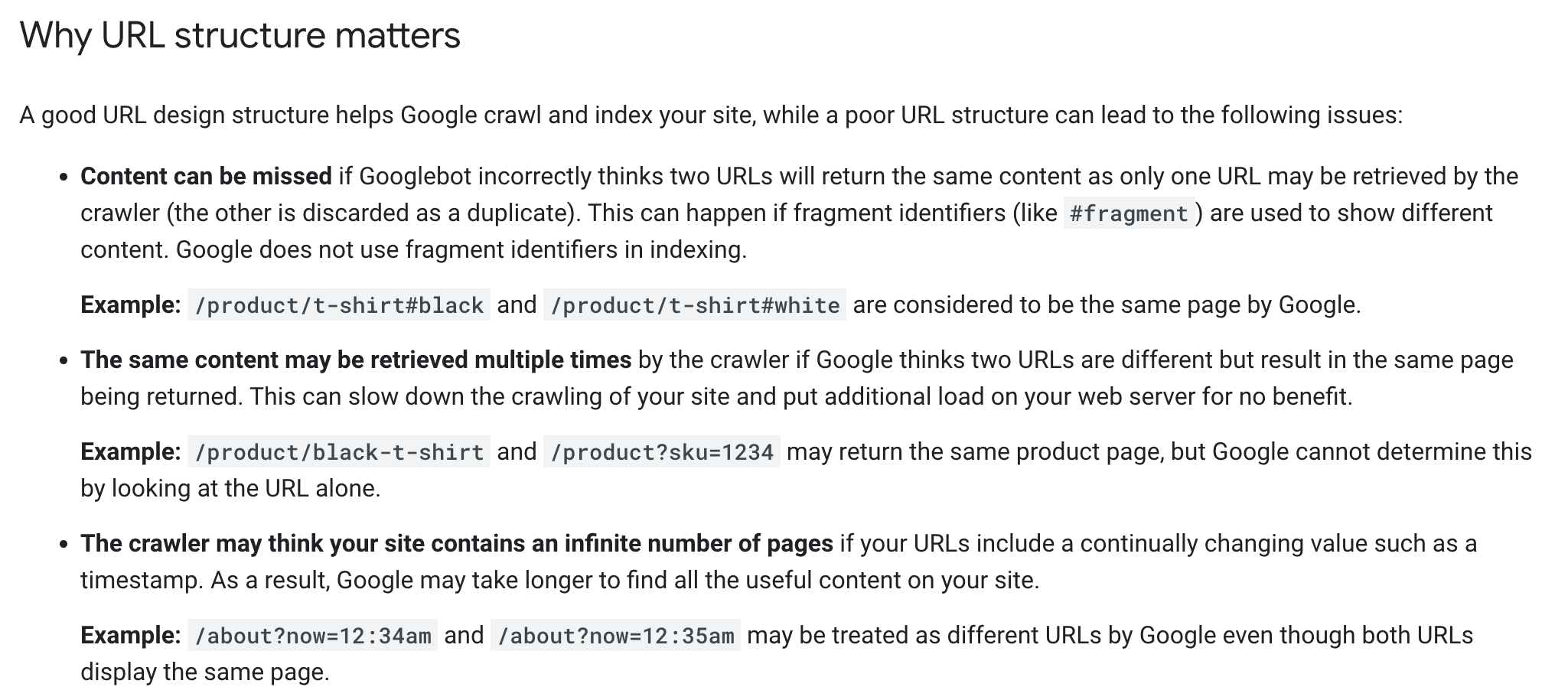

Examples of poorly structured URLs and the issues they can cause according to Google Search Central:

Poorly structured URLs can make it harder for search engines and users to understand what a page is about.

You should review your URLs to ensure they are concise and keyword-optimized.
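To make "concise and keyword-optimized" concrete, here is a minimal sketch in Python that turns a product title into a short, readable URL slug. The six-word limit, the function name slugify and the example.com address are assumptions for illustration. Your platform will have its own rules for generating URLs.

```python
import re
import unicodedata


def slugify(title, max_words=6):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Drop apostrophes so "Women's" becomes "womens" rather than "women-s".
    text = title.replace("'", "").replace("\u2019", "")
    # Strip accents: "Café" becomes "Cafe".
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Keep only runs of letters and digits, then limit the length.
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])


# Hypothetical product title.
slug = slugify("Women's Linen Wrap Dress (Sage Green)")
print("https://www.example.com/products/" + slug)
```

The result keeps the words a shopper would actually search for and leaves out punctuation, capital letters and other characters that make a URL harder to read.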

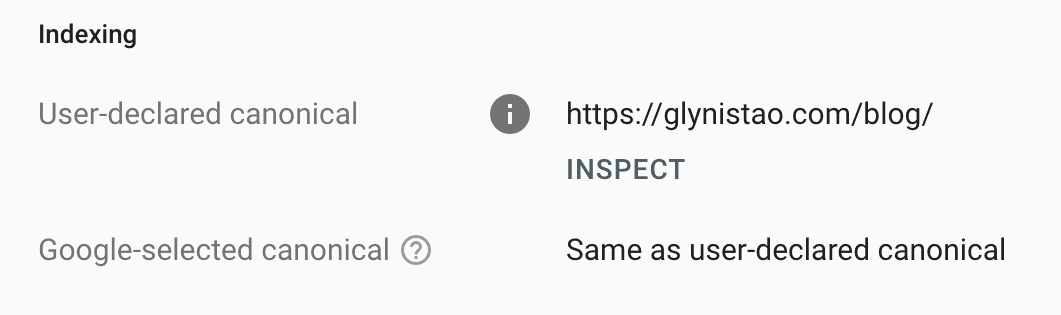

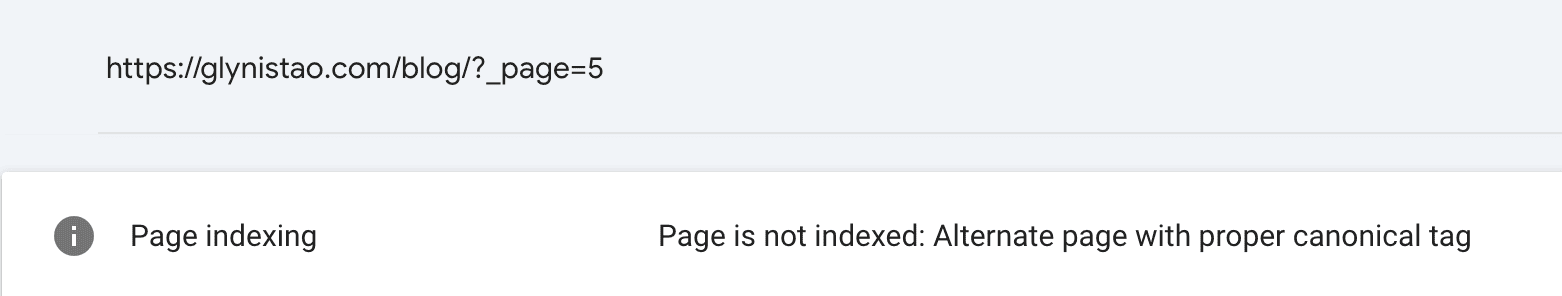

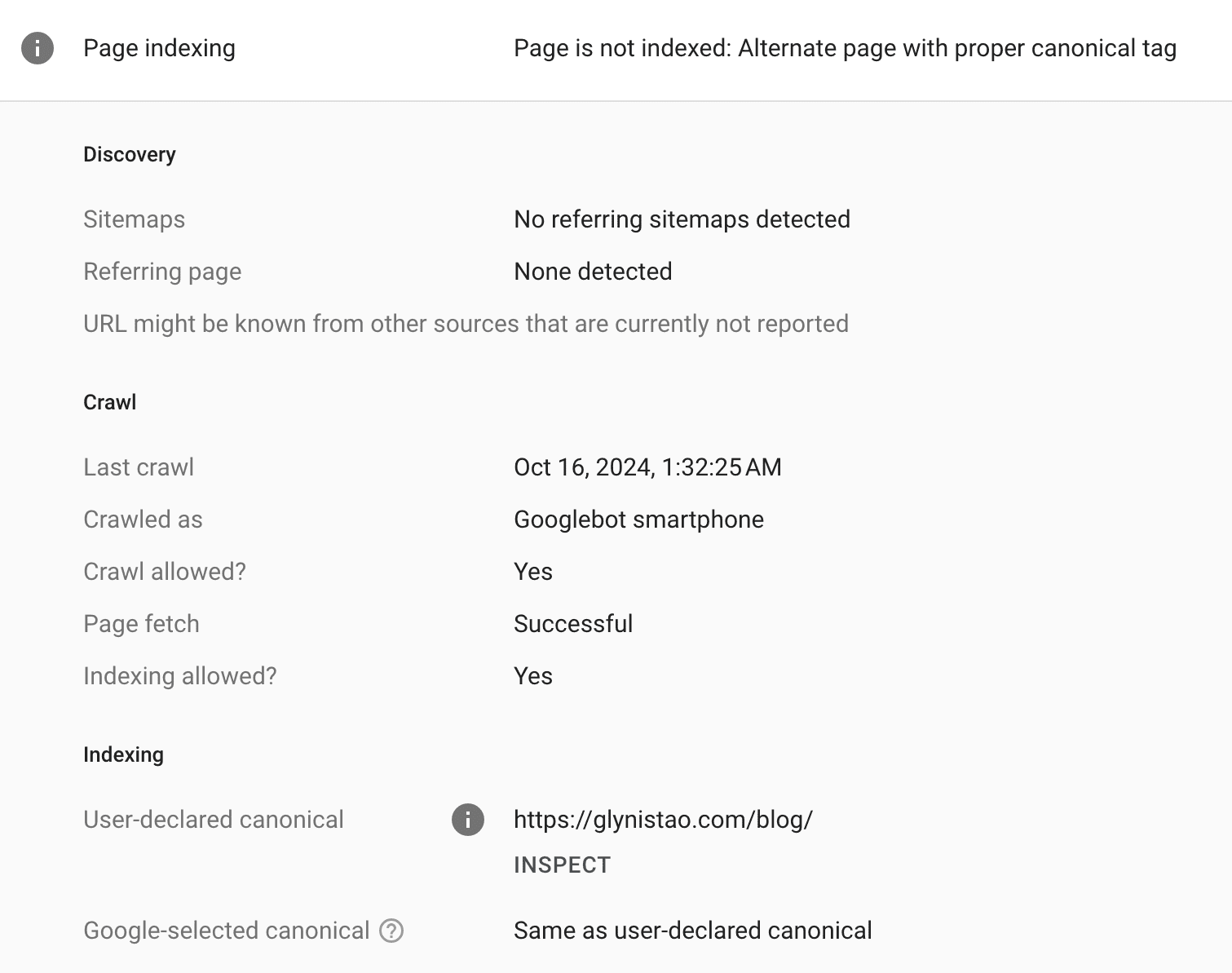

Canonical Tags

Use canonical tags to manage duplicate content.

Before I get into canonical tags, let’s first define what a canonical URL is versus a canonical tag.

SEMrush defines a canonical URL as “the version of a webpage chosen by search engines like Google as the main version when there are duplicates. And is prioritized to avoid showing repetitive content that doesn’t provide unique value in search results.”

Since Google doesn’t always choose the primary URL that you want it to, you can use canonical tags to manage your site’s duplicate content.

A canonical tag guides search engines to a single “main” version of a page.

For example, if multiple URLs display similar products (like sorted or filtered variations), canonical tags help consolidate these into one authoritative page. This preserves SEO strength and avoids diluted rankings.

You can set up a canonical tag manually by adding <link rel="canonical" href="https://example.com"/> to the <head> section of your page's code, but few site owners do this because it is tedious. It's usually much easier to do it through your website platform.
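For the sorted and filtered variations mentioned above, the idea can be sketched in a few lines of Python. This example assumes that every query parameter on the URL only sorts or filters the same products, which will not hold for every site, for instance where a parameter changes the actual content. The function names and the parameter names in the sample URL are hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Return the URL without its query string or fragment.

    Assumes the query parameters only sort or filter the same content.
    """
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def canonical_tag(url):
    """Return the <link> tag that points search engines to the main version."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}"/>'


# A sorted and filtered view of a hypothetical dresses collection.
print(canonical_tag(
    "https://example.com/collections/dresses?sort_by=price-ascending&color=red"
))
```

Every sorted or filtered view of the collection ends up pointing to the same main collection page, which is the consolidation a canonical tag is meant to achieve.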

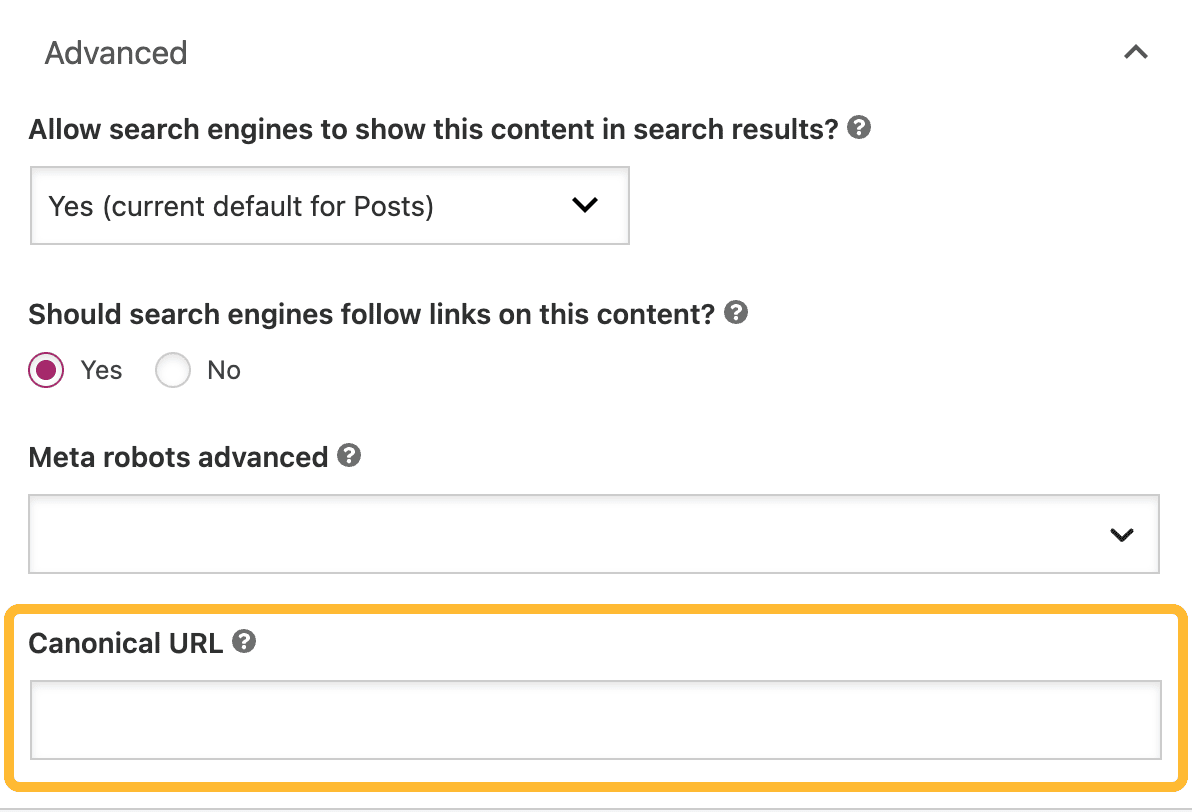

Setting canonical tags in WordPress

Install Yoast SEO, then scroll to the “Advanced” section on a page or post where you’ll see an option to specify a canonical.

Setting canonical tags in Shopify

Shopify generally manages canonicalization fairly well out of the box, although it isn't always perfect. Most small to medium-sized stores will likely not need to manually adjust canonical tags. If adjustments are necessary, I suggest enlisting the help of a web developer, since you'll have to modify the code in your theme's .liquid files directly.

You can use tools like Google Search Console or SEMrush to confirm your canonical tags are working effectively to keep search engines focused on the most relevant page.

Broken Links (404 and 301 redirects)

Repair broken links and set up 301 redirects for missing pages.

Broken links (often 404 errors) occur when pages are deleted, URLs are changed without redirects, or external sites remove linked content.

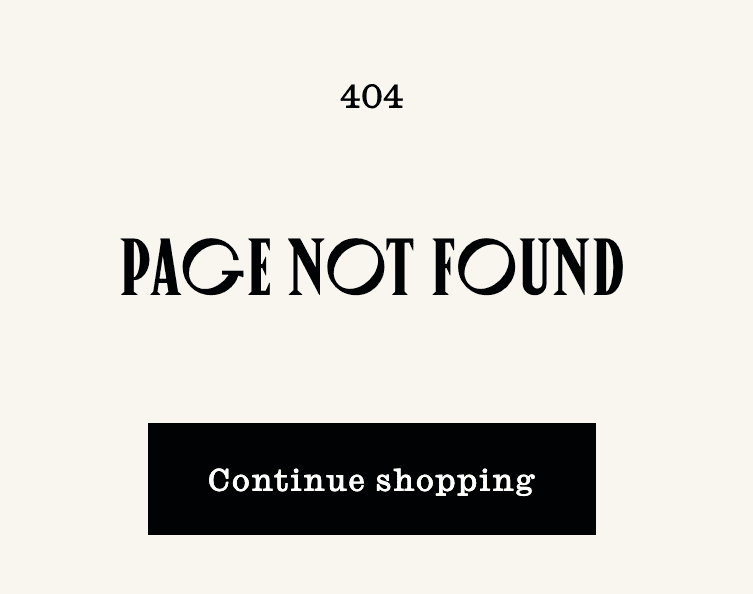

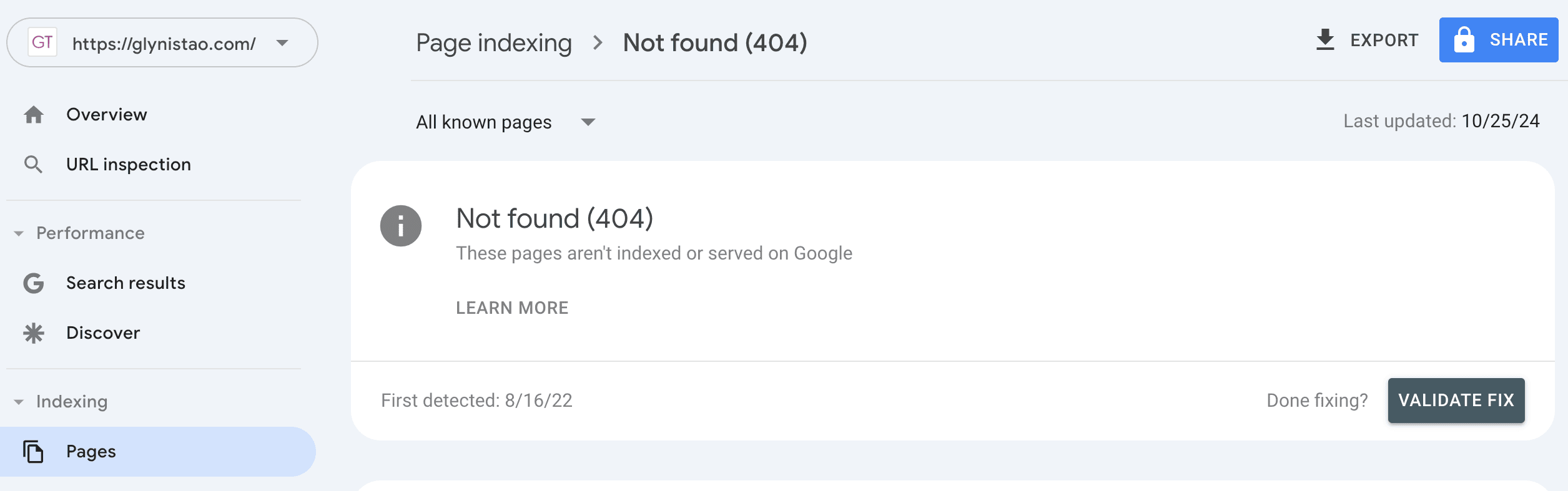

This is what a 404 page looks like. Does it look familiar?

Many websites have these issues, and the problem is that search engines can interpret broken links as a sign of poor maintenance, which can hurt rankings and deter potential customers. Be proactive in repairing broken links to improve your user experience and signal to search engines that your website is well-maintained.

Use tools like Google Search Console or SEMrush to scan your site for broken links. Update or redirect these links to active, relevant pages. For missing pages, set up 301 redirects (permanent redirection from one URL to another) to help retain any SEO authority previously linked to the original URL.
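When a store has been around for a while, redirects can pile up so that one old URL points to another old URL before reaching a live page. The Python sketch below shows one way to tidy a list of 301 redirects so that every old URL points straight to its final destination. The redirect list and function names are hypothetical, and your platform's redirect settings may already handle some of this for you.

```python
def resolve_redirect(path, redirects):
    """Follow the redirect map from `path` to its final destination.

    Returns (final_path, number_of_hops). Raises ValueError if the
    redirects loop back on themselves.
    """
    seen = [path]
    while path in redirects:
        path = redirects[path]
        if path in seen:
            raise ValueError("Redirect loop: " + " -> ".join(seen + [path]))
        seen.append(path)
    return path, len(seen) - 1


def flatten_redirects(redirects):
    """Point every old path directly at its final destination."""
    return {old: resolve_redirect(old, redirects)[0] for old in redirects}


# Hypothetical 301 redirects collected over a few seasons.
REDIRECTS = {
    "/products/old-dress": "/products/summer-dress",
    "/products/summer-dress": "/products/linen-wrap-dress",
    "/collections/sale-2023": "/collections/sale",
}

for old, new in flatten_redirects(REDIRECTS).items():
    print(old, "->", new)
```

After flattening, a visitor or search bot landing on the oldest product URL reaches the current product page in a single redirect instead of two.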

Congratulations—You made it through Technical SEO!

Wow! That was a lot of technical information. And if you’re not a “techy” person—I’m proud of you for sticking to the end of this article. Because these aren’t easy concepts to grasp.

I like to tell people that one of my purposes as an SEO specialist is to “take the mystery out of SEO” for fashion e-commerce business owners. So I hope this article has helped to open your eyes to the issues occurring on the backend of your site that could affect your organic site traffic and ultimately your sales.

For further reading check out these related articles:

The Complete “How-To” Guide for E-Commerce SEO - From Beginner to Advanced

How to run an SEO Website Audit for Fashion E-Commerce

Download my SEO ranking factors checklist to get started on improving your Technical SEO today!

Want to know how your website is performing and how you can improve your rankings on search engine results? Schedule a Website Audit Plus